Why AI Image Editing Is Often Rebuilding

AI can make true pixel edits, but many image “fixes” work by reconstruction. Typography and colourisation expose where rebuilding replaces editing.

How colour, text, and “fixes” revealed what generative images really are

It started, as these things often do, with something that felt right at first glance.

I shared a colourised version of a famous photograph of The Hague from around 1860. A quiet street, soft tones, a sense of calm. It looked like a pleasant summer day.

Only later did I notice something odd.

The trees were bare.

A summer that was not there

The impression of summer came entirely from colour. Greenish hues suggested foliage, warmth, life. But when I looked more carefully, there were no leaves at all. Just branches.

The colourisation had not lied outright, but it had implied. It filled in what usually makes sense, not what was actually present.

At that point, this still felt like a limitation of colourisation. Interesting, but contained.

Where text breaks the spell

Then someone commented that they had tried to read the street sign.

2. AFD, Hooge Nieuwstraat.

In the colourised image, the sign had become unreadable. Random glyphs, shapes that resemble letters but form no words. In the original black-and-white photograph, the same sign is still legible to a human eye. Not perfectly crisp, but recognisable.

This was the first moment where the problem no longer felt cosmetic.

If the information was there in the original, why was it lost?

“It rebuilds the image”

Someone replied, almost casually: an AI does not really colour in an image. It rebuilds the photo entirely. What you see is a generated image that resembles the original as closely as possible.

That sentence stuck.

If that were true, a lot of things suddenly made sense. The invented sense of season. The loss of typographic fidelity. The overall plausibility paired with local incoherence.

But I did not yet know whether it was true. So I started testing.

The Hague, a summer day 165 years ago.

Rob Hoeijmakers (@robhoeij), December 8, 2025

Added a bit of colour. You can see more clearly that the sun is shining, and it makes the Mauritshuis stand out nicely. https://t.co/MI70qVIzEZ pic.twitter.com/K3yW1xgPH7

Famous images behave differently

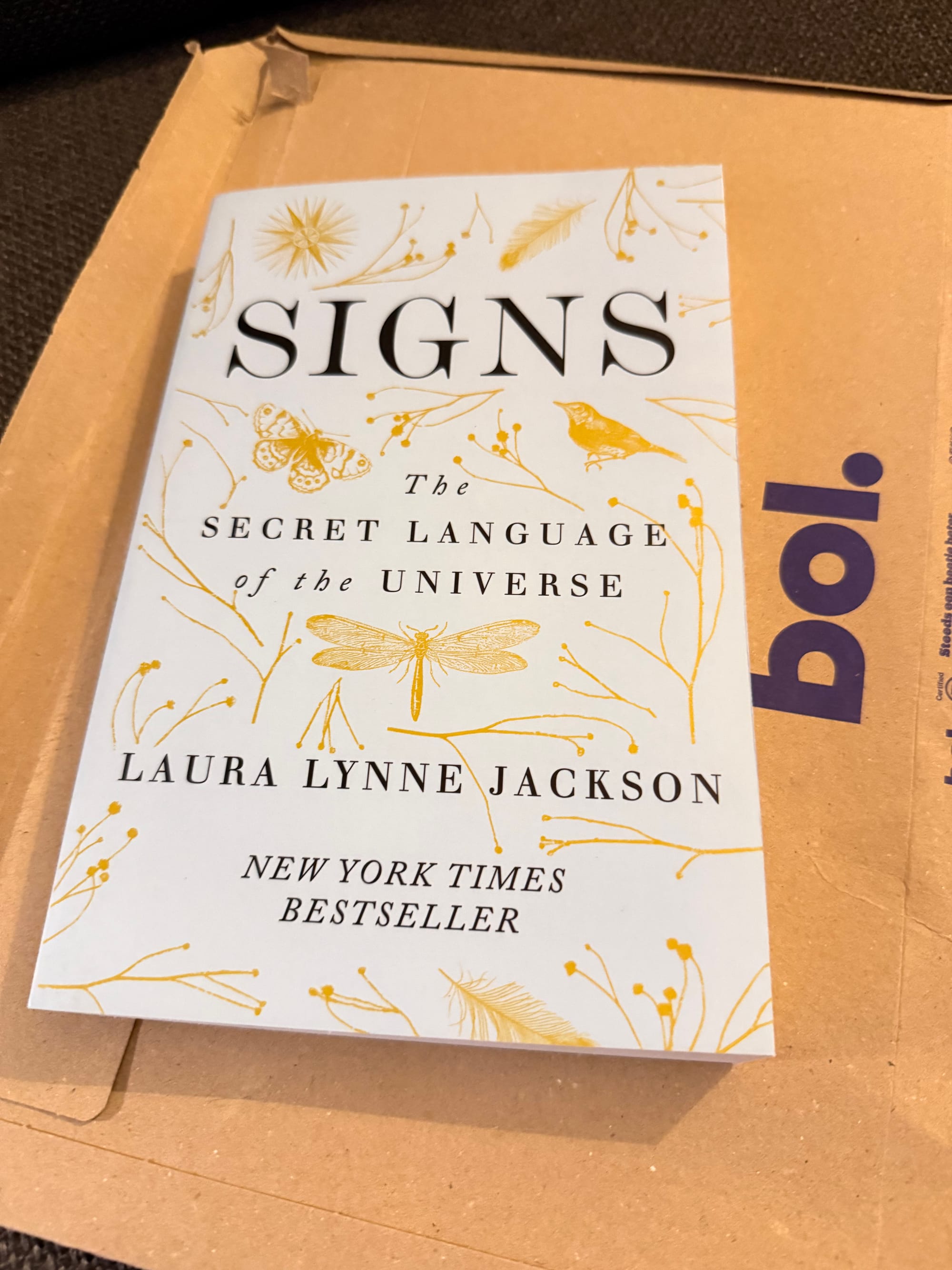

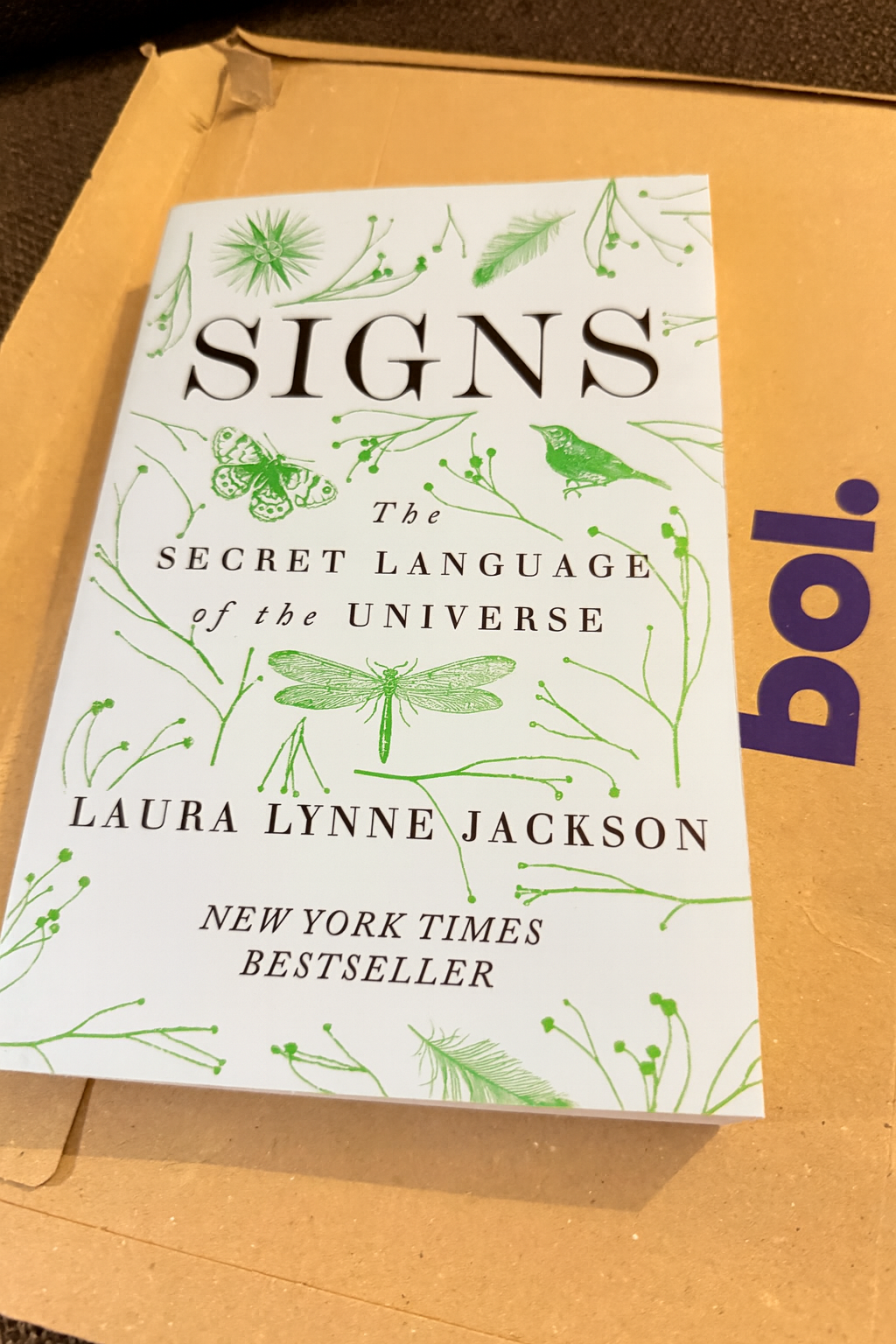

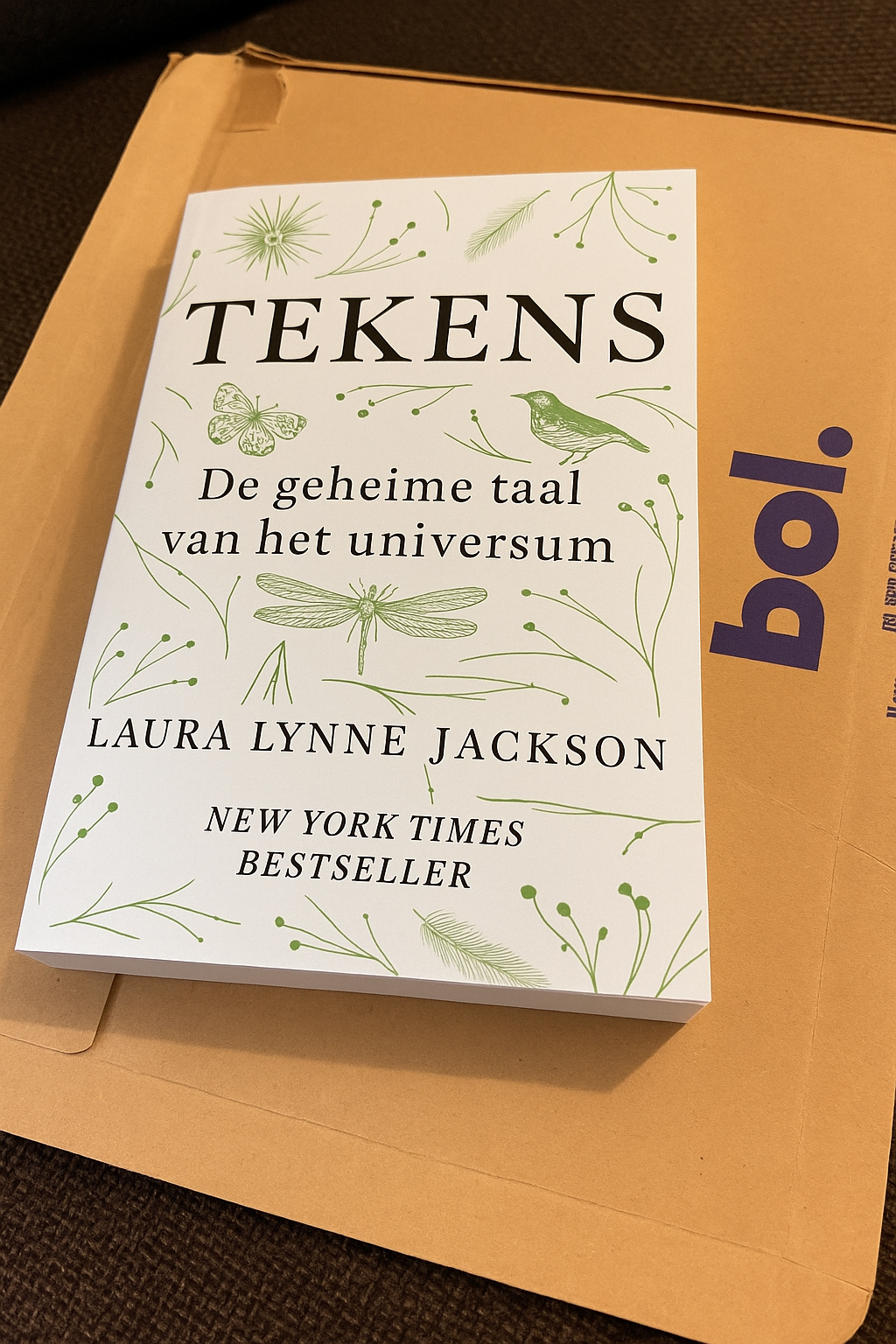

I shared an image of a well-known book with ChatGPT and began experimenting. First, I asked for a small edit. Almost nothing seemed to change. Then I asked for a translation of the text.

This time, the result was strikingly coherent.

At one point, ChatGPT effectively recognised the book. It was not merely extracting text from the image, but identifying the object itself and drawing on prior knowledge to rebuild it accordingly.

That moment was revealing. The system was not reading the text purely from pixels. Recognition anchored the reconstruction.

Editing appeared to work here because the image belonged to a familiar visual and cultural pattern.

The original photo, the true edit from yellow to green, and the regenerated translation. Note the change of typeface.

When recognition disappears

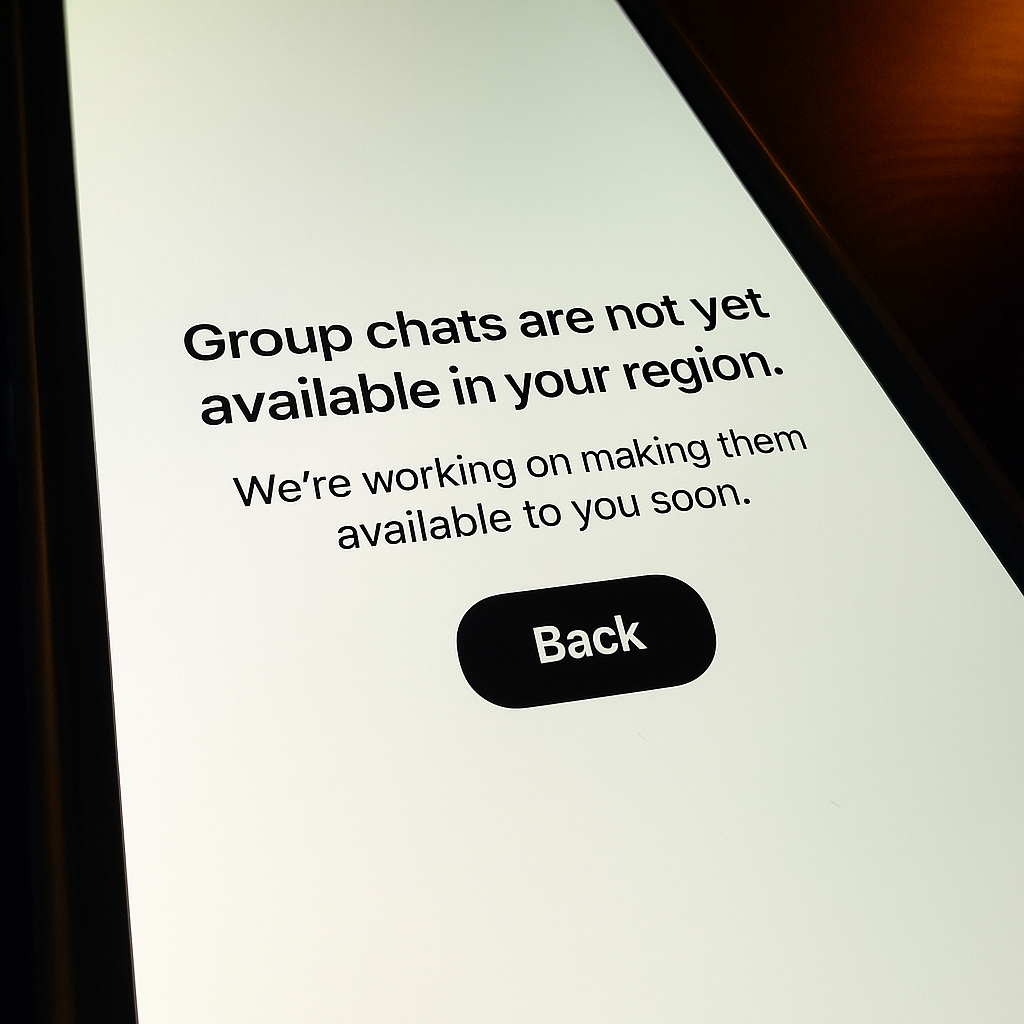

To remove that anchor, I changed the input. I took a photograph myself: a screen, shot at an angle, with reflections and distortion. No known layout. No shared cultural reference.

Convincing at a glance, but wrong in the details: the image is regenerated, not edited, losing pixel noise, moiré patterns, precise perspective, and the fidelity of that specific screen and moment.

A human reader can still make sense of such an image. We are remarkably tolerant of noise, perspective, and imperfection.

When ChatGPT regenerated the image, the result was visibly weaker. Layout drifted. Text degraded. The seams became obvious.

This contrast was decisive.

The system was not preserving the original image and modifying it. It was rebuilding it, and it did so far more effectively when it already “knew” what it was looking at.

Typography as a diagnostic tool

Typography turned out to be the clearest signal throughout this process.

Letters are not textures. They are symbols. Humans can tolerate blur and distortion and still recognise them. Generative image models, trained primarily on visual similarity, cannot reliably preserve symbolic correctness unless the text is already familiar or explicitly generated as text.

That is why typography collapses first. It is where regeneration reveals itself most clearly.
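One way to make this diagnostic concrete is to transcribe the text from the original and from the AI output, then score how much of it survived. The sketch below is only an illustration: `text_fidelity` is a hypothetical helper, the transcriptions are assumed to be typed in by hand (or produced by any OCR tool), and the garbled string is an invented stand-in, not the actual output of the colourised photo.

```python
from difflib import SequenceMatcher


def text_fidelity(expected, observed):
    """Return a similarity score in [0, 1] between two transcriptions.

    Hypothetical helper: compares the strings character by character,
    ignoring case and leading/trailing whitespace.
    """
    a = expected.strip().lower()
    b = observed.strip().lower()
    return SequenceMatcher(None, a, b).ratio()


# Text a human can still read on the sign in the original photograph.
sign = "2. AFD, Hooge Nieuwstraat."

# Invented example of what letter-like shapes might transcribe to.
garbled = "Z. AED, Hooqe Nleuvstroot"

print(text_fidelity(sign, sign))     # identical transcriptions score 1.0
print(text_fidelity(sign, garbled))  # lower score: symbols were not preserved
```

A score well below 1.0 on text that was legible in the source is a strong hint that the image was rebuilt rather than edited, because a local edit elsewhere in the picture would have no reason to touch the lettering.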

What “editing” usually means now

By this point, the pattern had stabilised.

Most consumer-facing AI image tools do not edit images in the traditional sense. They interpret an image, combine it with a prompt, and generate a new image that fits both. Sometimes that new image is almost identical. Sometimes it diverges in telling ways.

True pixel-preserving edits exist, but they are the exception. Regeneration is the default because it is flexible, powerful, and forgiving.

Once you see that, the confusion lifts.
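The difference between a pixel-preserving edit and a regeneration can also be checked numerically. The following is a minimal sketch, assuming both images have the same dimensions and have already been loaded as rows of `(r, g, b)` tuples; `changed_fraction` is a hypothetical helper and the tiny images are toy data, not measurements from the examples in this article.

```python
def changed_fraction(original, edited, tolerance=0):
    """Fraction of pixels with any channel differing by more than `tolerance`.

    Hypothetical helper: images are lists of rows of (r, g, b) tuples.
    """
    same_shape = len(original) == len(edited) and all(
        len(a) == len(b) for a, b in zip(original, edited)
    )
    if not same_shape:
        raise ValueError("images must have the same dimensions")

    total = 0
    changed = 0
    for row_a, row_b in zip(original, edited):
        for px_a, px_b in zip(row_a, row_b):
            total += 1
            if any(abs(ca - cb) > tolerance for ca, cb in zip(px_a, px_b)):
                changed += 1
    return changed / total if total else 0.0


# Toy 4x4 grey image standing in for a photograph.
original = [[(100, 100, 100) for _ in range(4)] for _ in range(4)]

# A local edit: one pixel recoloured, everything else untouched.
local_edit = [row[:] for row in original]
local_edit[1][2] = (0, 160, 0)

# A regeneration: every pixel is close to the original, none is identical.
regenerated = [[(r + 3, g - 2, b + 1) for (r, g, b) in row] for row in original]

print(changed_fraction(original, local_edit))   # 1 of 16 pixels changed
print(changed_fraction(original, regenerated))  # all 16 pixels changed
```

With real files, lossy formats such as JPEG shift pixel values slightly on every save, so a small `tolerance` helps separate re-encoding noise from genuine change. What matters is the pattern: a true edit changes a region, a regeneration changes nearly everything a little.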

Here is a colourisation where the image seems to have been edited rather than regenerated.

Learning to see the machine

What changed for me was not my opinion of these tools, but my way of looking at their output.

I stopped asking why something was wrong, and started noticing where the system was guessing. I could see when it was anchored in recognition, and when it was improvising.

That shift, from frustration to understanding, is quiet but profound.

It is not about demystifying AI. It is about aligning our expectations with the kind of machine we are actually dealing with.

And once that alignment happens, the images stop being misleading. They become instructive.

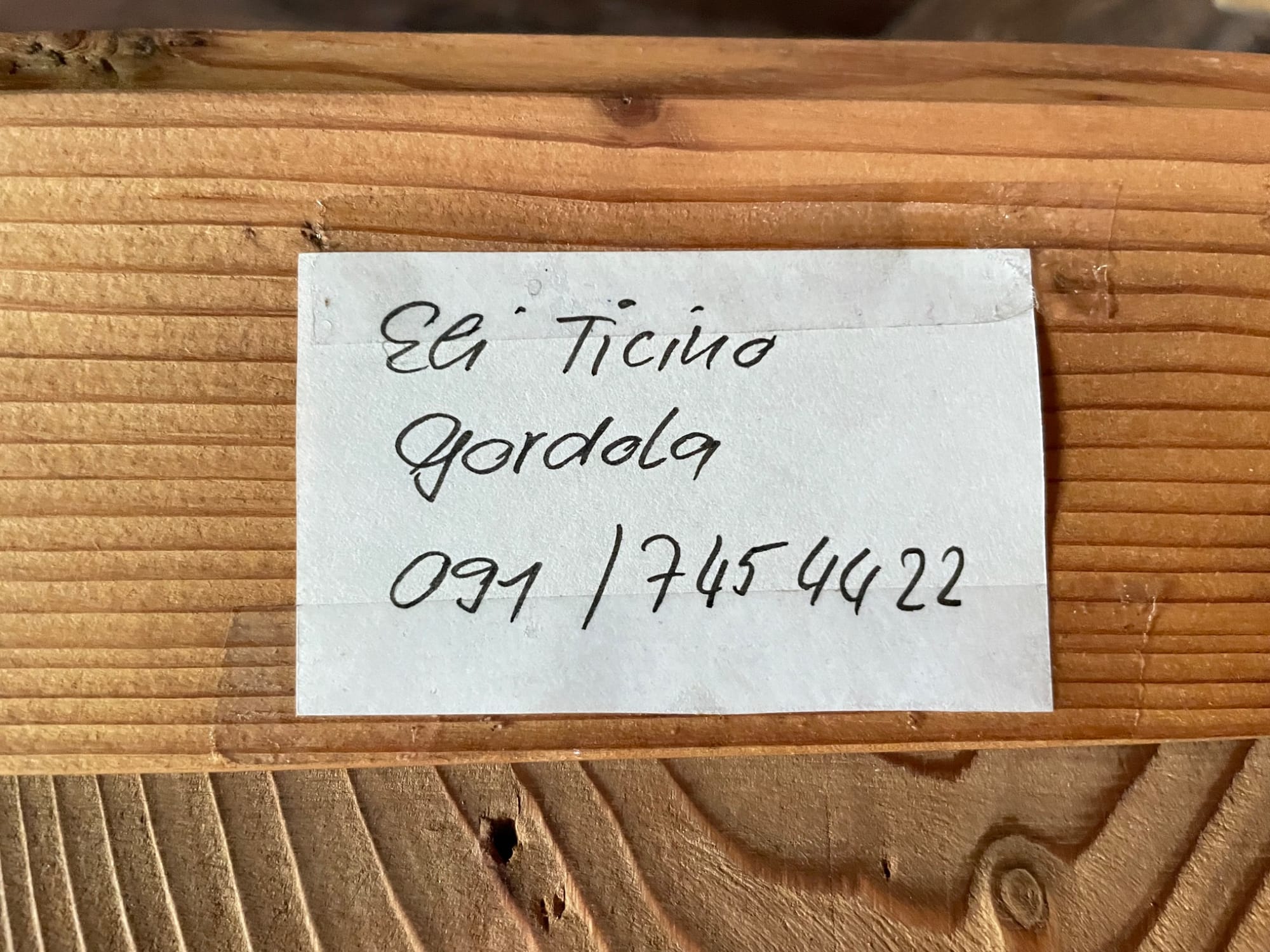

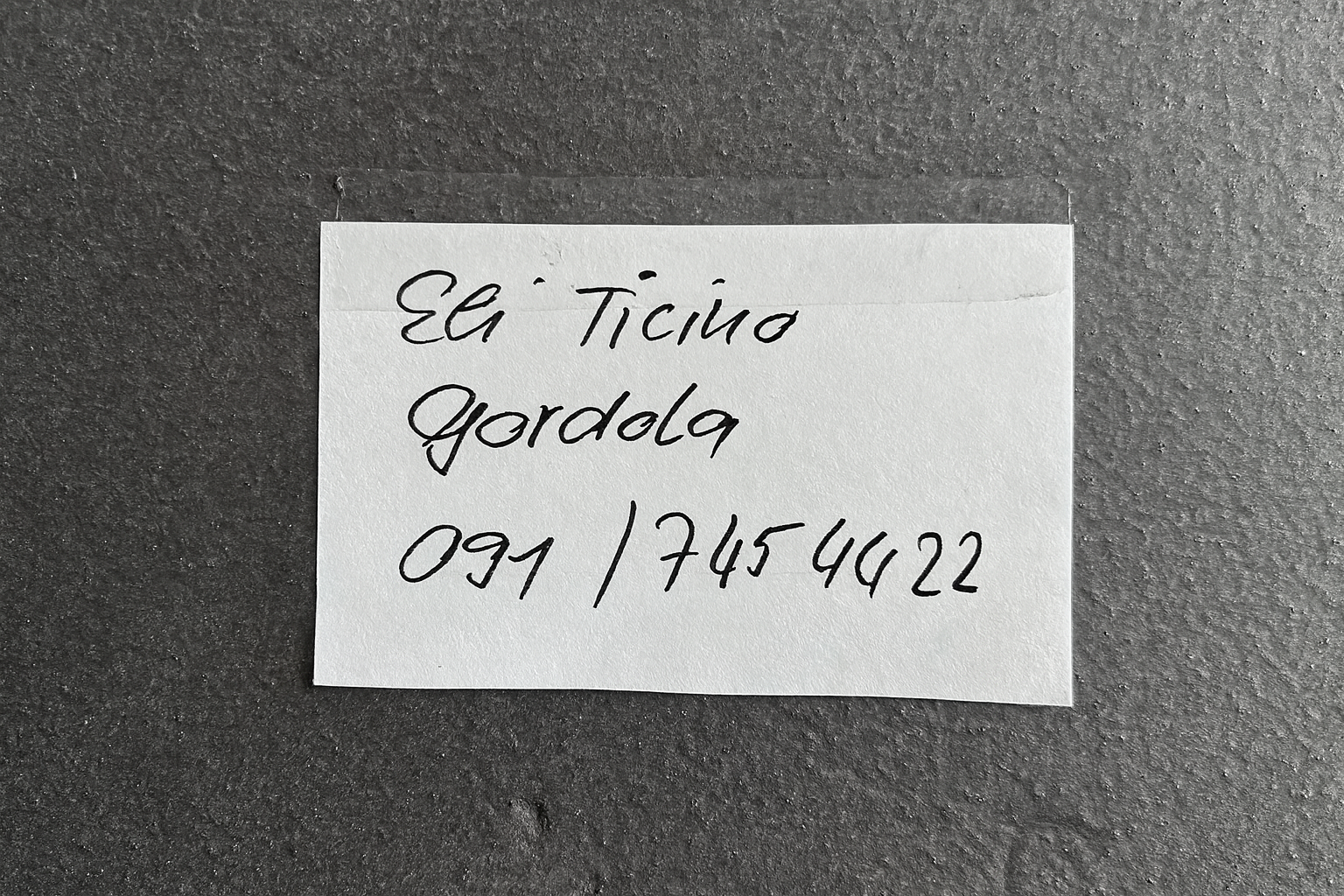

Here I tested ChatGPT by asking it to change the material from wood to metal. As you can see, it carried over the handwritten note (but lost the second bit of Scotch tape).