Flying Blind: Measuring Traffic When Your Readers Are Machines

As readers move into ChatGPT, Perplexity and Google’s AI Overviews, we lose sight of them. Can Cloudflare and Plausible help us measure what’s missing?

Youp van der Graaf, a freelance data and CX consultant with long experience in analytics and experimentation, asked how we can still measure website traffic when so much reading now happens inside language models. Chris Schneemann had raised similar concerns from his CX practice. Their questions landed because my own dashboards were quiet, yet my writing clearly circulated somewhere beyond them.

For months, I felt like a pilot flying at night with the sky full of signals, but no instruments that showed where they were going.

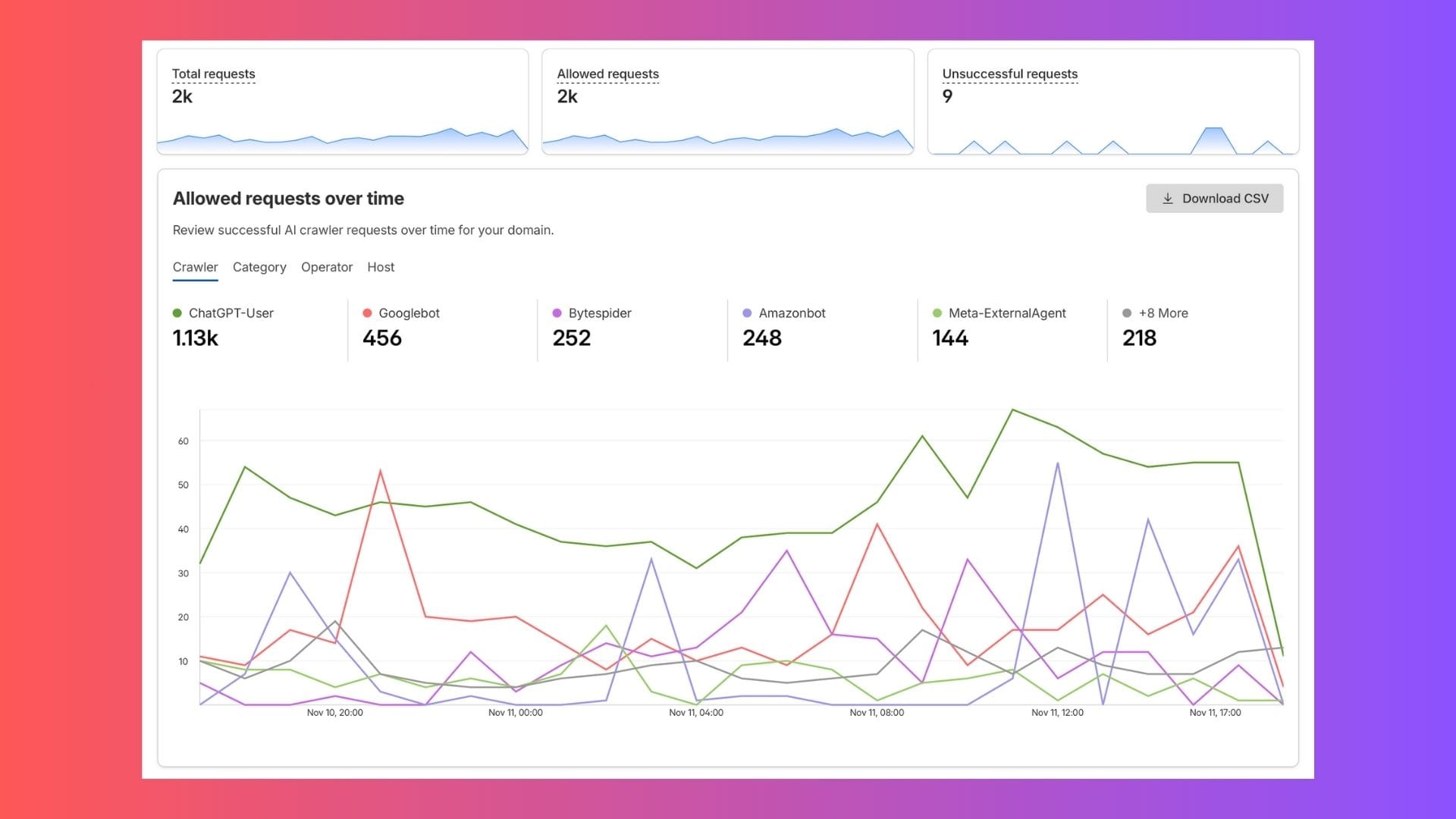

Then I opened Cloudflare and saw something new: lines of traffic labelled ChatGPT-User, GPTBot, PerplexityBot.

Suddenly the invisible became faintly visible.

Quick takeaways

- A growing share of online reading now happens within AI systems, unseen by traditional analytics.

- There are three forms of AI interaction: training crawls, conversational mentions, and human click-throughs.

- The new attention economy is dominated by ChatGPT, Perplexity, and Google’s AI Overviews.

- Cloudflare’s bot analytics offer the first faint radar of this new airspace.

- We need measurement tools that can operate without cookies, scripts, or clicks.

The discovery

In my earlier pieces, The End of Google Search as We Know It and When Bots Become Readers, I wrote about the shift from discoverability to readability.

Google’s role as the great referrer was already eroding, and AI crawlers had begun to treat blogs as training material rather than destinations.

This time the story became tangible.

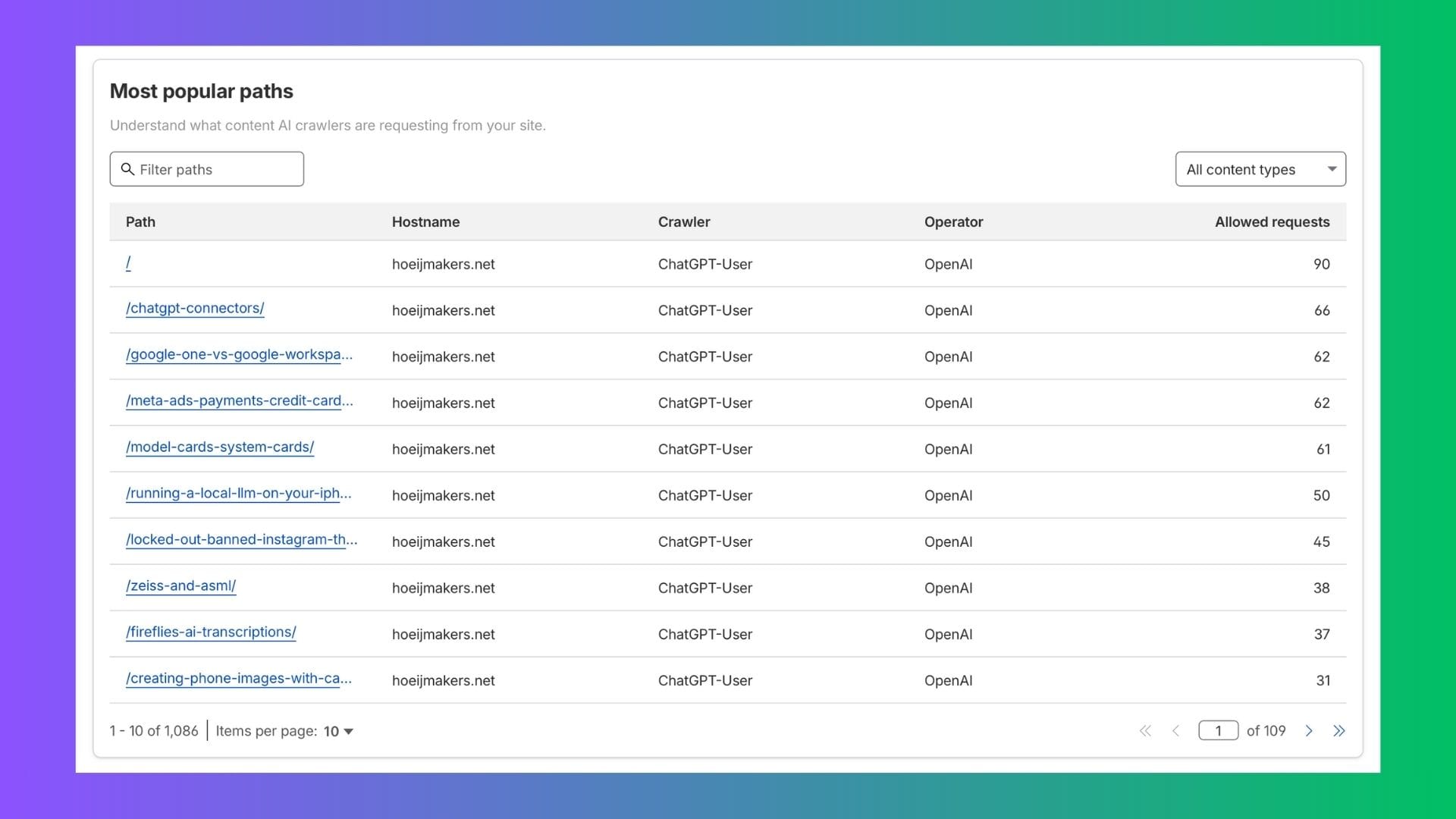

In Cloudflare’s new AI Crawl Control dashboard, I watched my site being accessed hundreds of times a day by ChatGPT-User.

Not the training bot (GPTBot), but the live agent, a sign that real people, through ChatGPT, were drawing on my writing for answers.

Plausible, my privacy-first analytics tool, showed nothing, because it counts visits through a script that runs in the visitor’s browser. Here there was no browser, no JavaScript, no session.

Just raw retrieval: a machine fetching text to interpret for someone else.

Three kinds of interaction and only one we can see

When we talk about “AI traffic”, we’re actually talking about three different layers of activity:

- Training exposure – when large language models crawl or scrape your site to learn from it.

  Done by bots like GPTBot, ClaudeBot, or CCBot.

  It leaves traces in Cloudflare, but it’s not tied to any user or conversation.

  Your content becomes part of a statistical memory, a silent ingestion.

- Mention in conversation – when your work is quoted, paraphrased, or referenced inside an AI chat.

  The model may recall it from training or fetch it briefly in real time.

  You won’t see it. There’s no referrer, no metric, only the indirect sign when an AI agent reaches out to your page.

- Click-throughs from AI interfaces – when a human follows a citation from ChatGPT, Perplexity, or Bing Copilot and actually visits your site.

  These are the only visible events in Plausible or other analytics tools, with referrers such as chat.openai.com or perplexity.ai.
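These three layers can be told apart, roughly, from two fields of each request: the user agent and the referrer. Here is a minimal sketch in Python, assuming you can get both fields per request (from server logs or an export, for example). The function name, the bucket labels and the marker lists are my own illustration and cover only the names mentioned in this article. Grouping PerplexityBot with the crawlers is an assumption, and a live fetch is only an indirect hint of a mention in conversation, not proof of one.

```python
from urllib.parse import urlparse

# Marker lists are illustrative and limited to names used in this article.
# Putting PerplexityBot in the crawler group is an assumption.
CRAWLER_MARKERS = ("GPTBot", "ClaudeBot", "CCBot", "PerplexityBot")
LIVE_FETCH_MARKERS = ("ChatGPT-User",)
AI_REFERRER_HOSTS = ("chat.openai.com", "perplexity.ai")


def classify_request(user_agent: str, referrer: str = "") -> str:
    """Sort one request into a rough bucket (hypothetical labels)."""
    ua = user_agent.lower()

    # An assistant fetching a page on behalf of a user in a conversation.
    if any(marker.lower() in ua for marker in LIVE_FETCH_MARKERS):
        return "live_fetch"

    # A bot crawling the site, not tied to any user or conversation.
    if any(marker.lower() in ua for marker in CRAWLER_MARKERS):
        return "crawl"

    # A human in a browser who followed a citation from an AI interface.
    host = (urlparse(referrer).hostname or "").lower()
    if any(host == h or host.endswith("." + h) for h in AI_REFERRER_HOSTS):
        return "click_through"

    return "other"
```

Only the last bucket ever reaches a script-based analytics tool; the first two exist solely at the server or CDN level, which is why they stayed invisible to me for so long.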

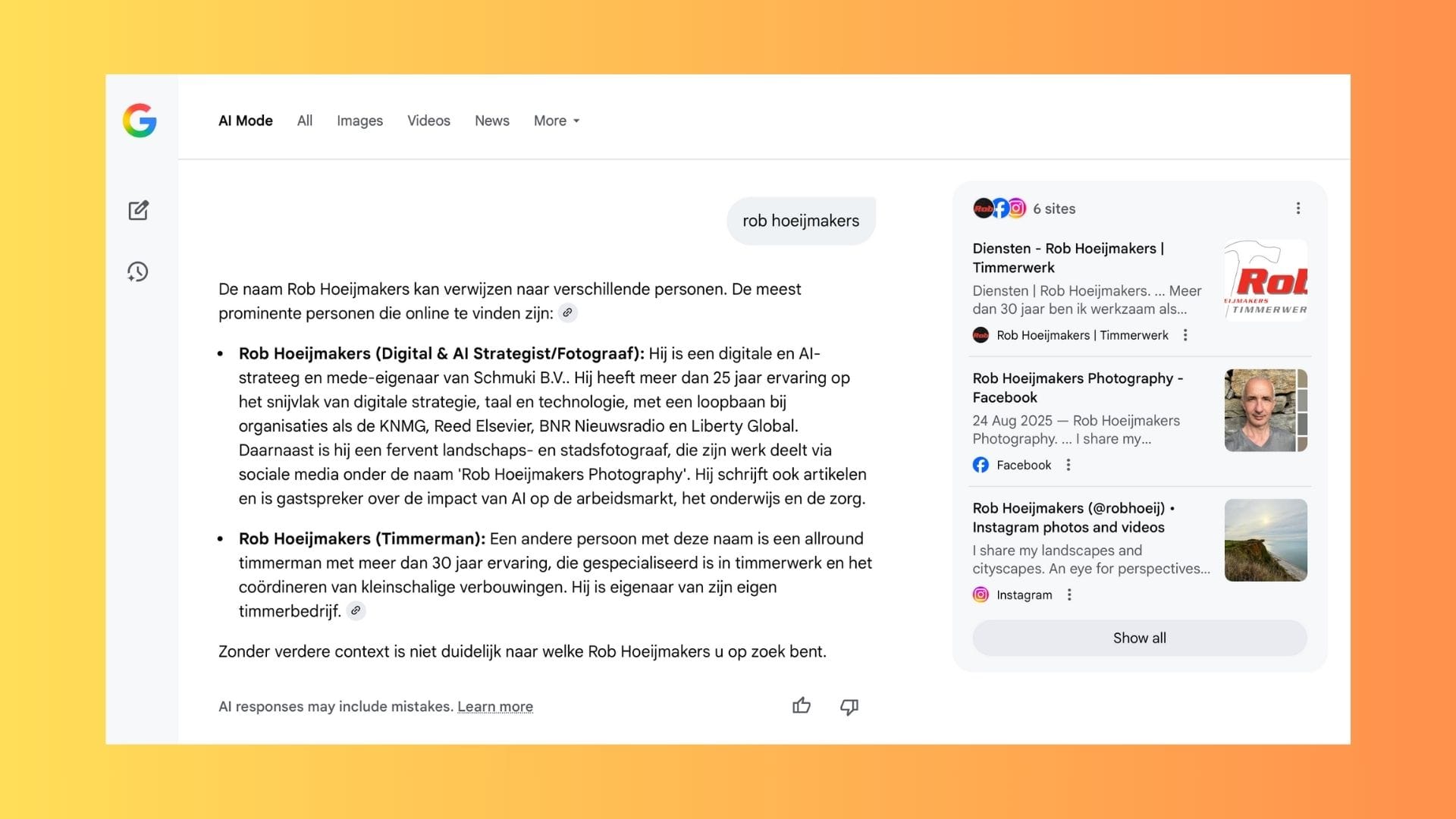

And then there’s Google’s AI Overviews, the most polished black box of them all. We may appear there as sources, but we can’t know how often or in what form. Not even Google Search Console reveals it.

Three new gravity fields

Three new centres of gravity are reshaping how information moves across the web, each bending attention in its own way, away from the open web and toward enclosed, conversational systems.

1. ChatGPT — the conversation interface

It has quietly absorbed the space once occupied by a thousand blogs and forums.

Users no longer click; they ask. The model responds, often by drawing from sources like ours, but the visit never reaches us. This is traffic without a trace, attention without visitation.

2. Perplexity — the search-dialogue hybrid

A middle ground between ChatGPT and Google.

Perplexity still crawls and cites, and some of that activity shows up in my Cloudflare logs. The volume is smaller, but more transparent: you can see when and what they retrieved. It’s a semi-permeable layer where references still pass through.

3. Google’s AI Overviews — the incumbent transformed

Google remains the web’s largest gateway, yet its AI Overviews are turning search results into compact answers. Our content still fuels them, but from the outside we see only silence, no signal, no metric. It’s the most refined black box: full visibility for users, none for creators.

“In my work with organisations that deploy chatbots, the same broader issue returns: the effect on traffic, Google visibility, and a brand’s presence inside AI platforms like ChatGPT.”

Chris Schneemann, Head of Sales, Conversed.ai

Flying with new instruments

For the first time, Cloudflare offers a partial radar.

It tells us when AI crawlers train on our content, and when assistants fetch it on behalf of users.

It’s imperfect (only 24 hours of history, no context), but it’s something.

From these traces, we can infer that writing has become a dual act: communicating with humans and maintaining semantic clarity for machines.

Writers are used to measuring attention through pageviews and shares.

Now, we may need to measure resonance through retrievals.

The audience still exists; it’s just reading through an interpreter.
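What would measuring retrievals look like in practice? One option is to count them yourself, per page and per agent. The sketch below is in Python and assumes something I have not described above: access to raw server logs in the common "combined" format, where the user agent is the last quoted field. The function name and the agent list are hypothetical, and the pattern only looks at GET and HEAD requests.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer and user agent of a
# combined-format access log line (assumed format, adjust to your server).
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Agent names taken from this article; extend as needed.
AI_AGENTS = ("ChatGPT-User", "GPTBot", "PerplexityBot")


def count_retrievals(lines):
    """Count requests per (agent, path) for the AI agents listed above."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        for name in AI_AGENTS:
            if name in match["agent"]:
                counts[(name, match["path"])] += 1
                break
    return counts
```

Running this once a day and keeping the totals would give a longer memory than a 24-hour dashboard, without cookies or scripts, because it relies only on requests the server already receives.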

What’s next

I’d like to pick up this conversation with the Plausibles of this world, privacy-minded analytics teams who already think beyond cookies. Could they help us measure AI-mediated attention without reintroducing surveillance?

Could we imagine a new kind of open signal, one that lets site owners know when their work is being read in the model rather than on the web? Should we look towards tools like WordPress and Ghost?

These are still early days, but I sense a new metric emerging:

not sessions, not clicks but citations in the synthetic layer.

In our LinkedIn exchange, Youp van der Graaf, freelance data & CX consultant, noted that optimising a site now means working with two distinct streams of behaviour: human traffic and AI-driven traffic. He also stressed that organisations need a way to bring the most relevant insights from both streams into their decision-making.

Closing reflection

The web hasn’t disappeared; it’s been internalised.

Our words travel further than we can see, carried by systems that read on our behalf. For now, Cloudflare’s dashboard is just a glimmer on the radar: faint, but reassuring. At least we know we’re still in the sky.

If you’re exploring the same terrain, or if you work on analytics tools like Plausible, I’d love to compare notes. We may still be flying through cloud, but the outlines of a new map are beginning to appear.