Chrome, Gemini Nano, and the Browser as AI Platform

The AI race is moving into the browser. Experiments with local models helped me recognise Chrome’s Gemini Nano for what it is.

Experiments in Local AI

Over the past few months I’ve been experimenting with running large language models locally. On my Mac mini, and even on my iPhone, I tried Gemma, Ollama and Haplo.

These trials were not about speed or size, but about understanding: what does it mean to hold a model on your own device, to adjust it, to quantise it, and to see its limitations up close. It was an exercise in ownership and curiosity, a way of reaching out to technology before it reaches too far into us.

A Surprise in Chrome

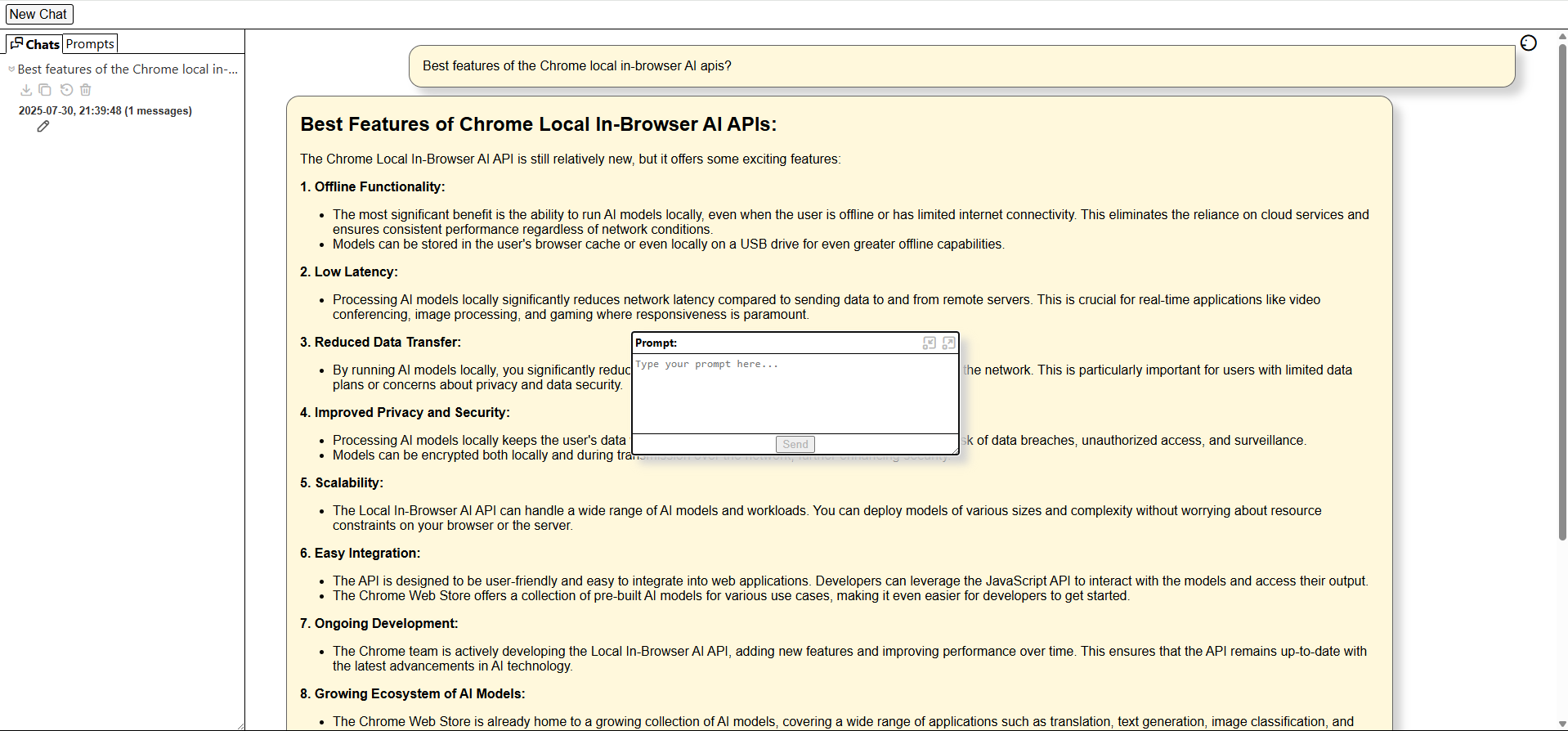

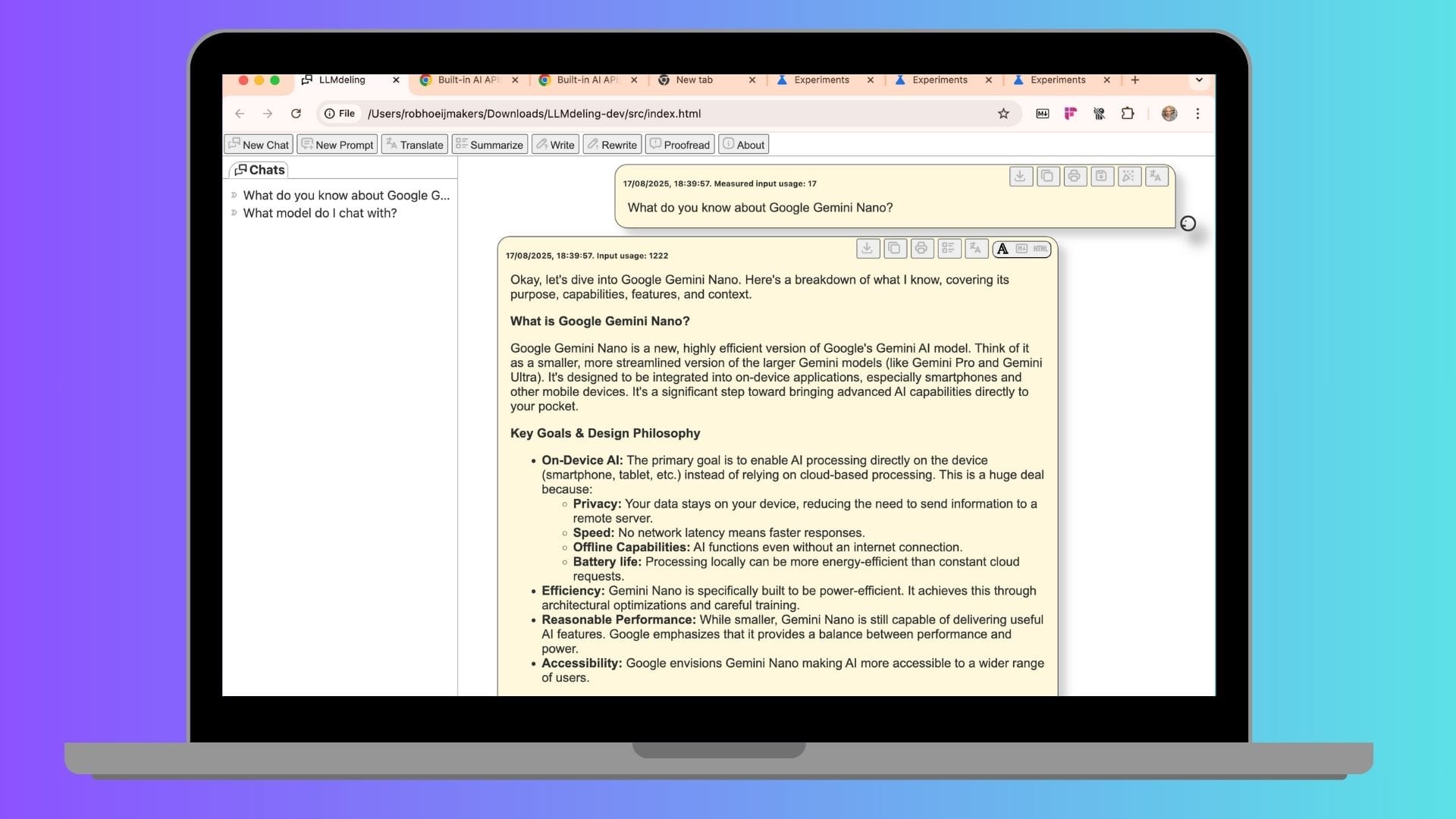

Recently, software engineer Roland Bouman showed me the interface he made for Chrome’s new built-in AI APIs. As a Safari user, I was on unfamiliar ground. But thanks to my earlier experiments, the picture was clear right away: Google has built a compact model, Gemini Nano, straight into the browser.

Developers can now call it directly from JavaScript, with no server and no API key. It struck me that this is a curated local LLM, packaged and distributed through the browser itself. What had taken me weeks of tinkering to learn was now a few lines of code for anyone running Chrome on supported hardware.
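To make those “few lines of code” concrete, here is a minimal TypeScript sketch of asking Chrome’s built-in model a question. It assumes the Prompt API shape documented for recent Chrome releases: a global `LanguageModel` with `availability()` and `create()`, and a session with `prompt()` and `destroy()`. The API has been renamed across versions (early builds exposed it under `window.ai`) and is only available on some Chrome channels and on supported hardware, so treat these names as a snapshot. The interfaces and the `askLocalModel` helper are hypothetical, a narrow typing of my own rather than the official definitions, and the model object can be passed in so the logic can be exercised outside Chrome.

```typescript
// Narrow, hand-written typing for the parts of Chrome's Prompt API used here.
// Assumption: these mirror the documented shape; they are not official types.
type Availability = "unavailable" | "downloadable" | "downloading" | "available";

interface LanguageModelSession {
  prompt(input: string): Promise<string>;
  destroy(): void;
}

interface LanguageModelStatic {
  availability(): Promise<Availability>;
  create(): Promise<LanguageModelSession>;
}

// Hypothetical helper: ask the browser's built-in model a question.
// Resolves to null when no built-in model is exposed or usable.
async function askLocalModel(
  question: string,
  api: LanguageModelStatic | undefined =
    (globalThis as unknown as { LanguageModel?: LanguageModelStatic }).LanguageModel,
): Promise<string | null> {
  if (!api) return null; // not Chrome, or the API is not enabled

  const status = await api.availability();
  if (status === "unavailable") return null;

  // For "downloadable"/"downloading", create() may first fetch the model;
  // Chrome may reject this unless it follows a user gesture such as a click.
  const session = await api.create();
  try {
    return await session.prompt(question); // inference runs on the device
  } finally {
    session.destroy(); // release the session's resources
  }
}
```

In a page, `await askLocalModel("Summarise this paragraph in one sentence.")` would resolve to the model’s reply, and to `null` in a browser without the API. Returning `null` instead of throwing lets a page quietly fall back to a server model or hide the feature.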

Market Noise and Strategic Moves

This discovery coincided with market noise. Perplexity’s reported bid to buy Chrome raised eyebrows, and observers linked it to the antitrust pressure on Google, where US regulators have proposed forcing the company to sell Chrome.

But the interest may also reflect something more: Chrome is no longer just a window onto the web. It is on its way to becoming an AI runtime, a platform that delivers models as easily as it once learned to deliver video or voice. If search was the strategic battleground of the last decade, the browser itself may be the new one.

Browsers as AI Platforms

Placed in context, the picture sharpens. Apple ties its AI to the operating system, through Core ML and on-device Siri. Microsoft integrates Copilot into Windows and Edge. Google embeds Gemini Nano into Chrome.

Different routes, but the same ambition: to make AI the default layer of interaction. For developers, this lowers barriers. For users, it can keep inference on the device. For regulators, it raises familiar questions of concentration and bundling.

“The browser is still the largest application runtime ever. If soon every developer can equip their app with local, free AI, that will pose a real challenge for AI-only vendors like OpenAI and Anthropic.” — Roland Bouman

Lessons and Open Questions

For me, the lesson is that small personal experiments can help in recognising large structural shifts. Running my own models taught me to see Chrome’s move not as magic, but as engineering.

The highway of AI now has parallel lanes: one of careful, local control, and one of mass distribution and standardisation. Both are real, and both matter.

The open question is whether AI will settle into a shared utility of the web, standardised like JavaScript or CSS, or whether we will remain in a patchwork of platforms, each pulling us into its own frame.