When Bots Become Readers: Publishing in the Age of AI Crawlers

Listening to Matthew Prince, the co-founder of Cloudflare, on Azeem Azhar’s podcast made me reflect on who actually reads my blog: people (like you), machines, or both?

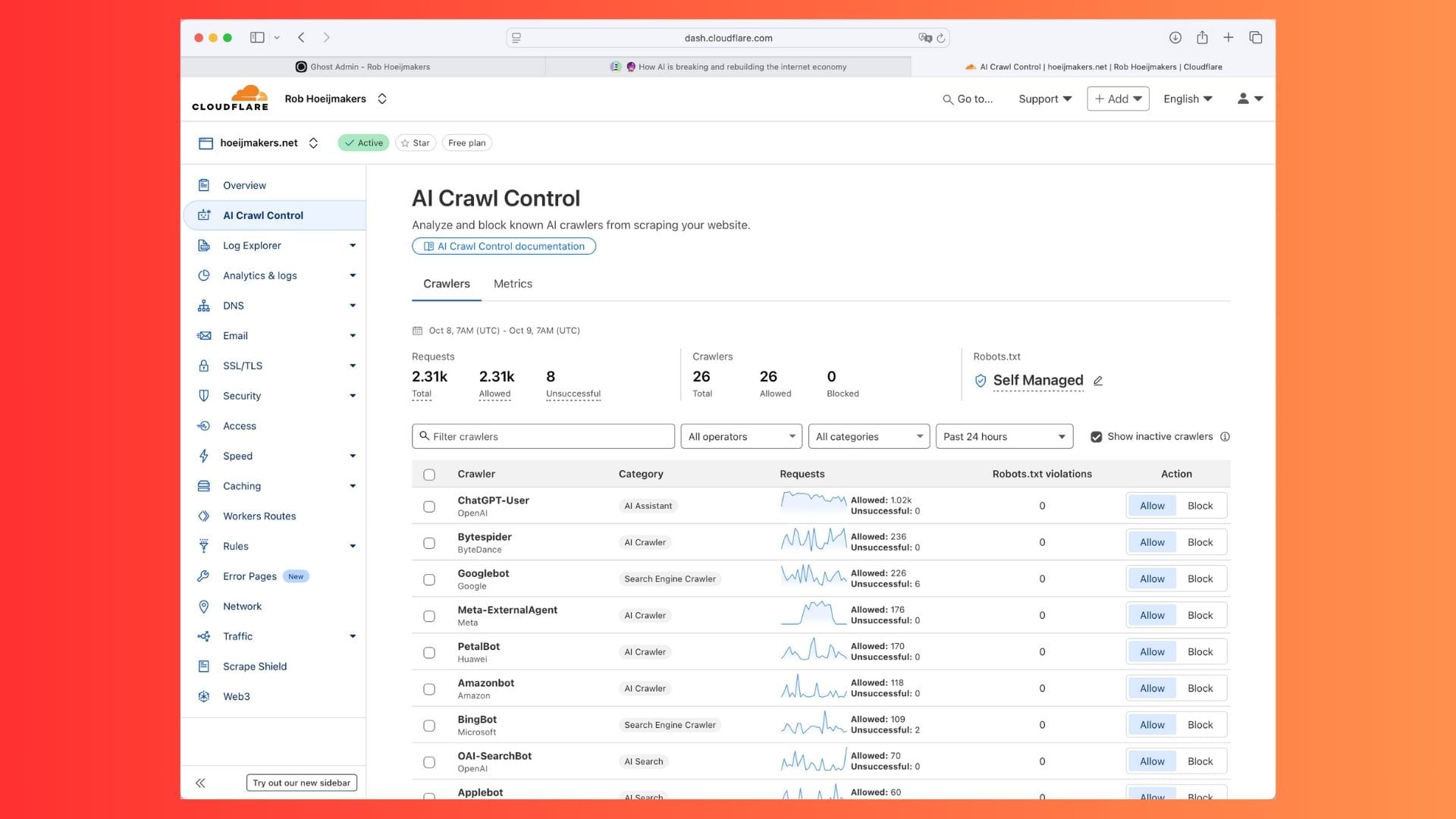

Prince described how a large share of today’s internet traffic no longer comes from people but from machines: bots that index, scrape, and simulate human behaviour.

I enjoyed the discussion. It was clear, grounded, and made me look at my own practice differently.

Because my Ghost site uses Cloudflare for its DNS, I realised that every request for my blog also passes through their network. In other words, I too publish into a space where machines are part of the audience.

Prince also became a tangible person when he mentioned that he and his wife own the local newspaper in their hometown.

Quick takeaways

- Much of today’s web traffic is automated and driven by bots that feed search engines, analytics, or AI models.

- Cloudflare’s tools make this visible and even let you control which bots reach your content.

- The web’s original exchange (free access in return for visibility and visitors) is eroding as answer engines replace search engines.

- For small publishers, full protection is mostly symbolic; the real shift lies in understanding how your content now circulates.

- Openness can still be a deliberate act, not a default or a weakness.

Seeing who’s really visiting

Cloudflare sits quietly between my site and the world. Every page request passes through its network, which caches, secures, and accelerates it.

It’s a layer I rarely think about, until I open its dashboard and see that many of my “readers” are actually bots.

Some are harmless: search crawlers, uptime monitors, link checkers.

Others belong to the new generation of AI systems, scanning text to train models that may soon write answers instead of sending users back to my page.

The changing contract of the web

This used to be the web’s implicit contract: publish something open, get indexed, and in return receive visitors.

Attention was the currency.

Now the exchange feels different. Content is still taken in, but the return flow (traffic, attribution, dialogue) is growing thinner.

The web is less a marketplace of exchange and more a vast input field for machines learning to speak.

Still writing, still designing

And yet, I keep beautifying my site.

I choose the fonts, arrange the photographs, edit the light in each image until it fits the tone of the words.

I design a space where thought and craft meet, a place meant to be seen.

It’s strange to realise that many of those who “see” it will never perceive the design at all. The bots take only the HTML; the care, the texture, the human choices disappear in translation.

Still, I publish.

Because somewhere in the mix there are people too: a handful who arrive through social media or through a link I share in conversation.

And even if not, the site remains a living reference: an archive in motion, a record of how ideas evolve over time.

A deliberate openness

I could block the major AI crawlers. I could fence off the site behind digital hedges.

For now, I don’t.

But awareness itself feels like a form of agency: knowing who (or what) comes knocking and deciding consciously whether to open the door.
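For the curious, here is roughly what that door looks like. This is a minimal sketch, not the setup on my own site (I block nothing): an illustrative robots.txt that turns away a few AI crawlers, checked with Python’s standard-library `urllib.robotparser`. The user-agent tokens are real (GPTBot is OpenAI’s crawler, CCBot is Common Crawl’s, and Google-Extended is Google’s control token for AI use, not a separate crawler), but the selection, the `check_access` helper and the example.com URL are my own assumptions for illustration. Keep in mind that robots.txt is a request, not a lock; a crawler can simply ignore it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt (an assumption, not a recommendation):
# the first group asks three AI-related agents to stay out entirely,
# the second group lets every other agent fetch everything.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: CCBot
User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def check_access(robots_txt: str, agents: list[str], url: str) -> dict[str, bool]:
    """Hypothetical helper: report, per user-agent token, whether robots.txt allows fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse from a string instead of fetching over the network
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    agents = ["GPTBot", "CCBot", "Google-Extended", "Googlebot", "Bingbot"]
    for agent, allowed in check_access(ROBOTS_TXT, agents, "https://example.com/my-post/").items():
        print(f"{agent:16} {'allowed' if allowed else 'blocked'}")
```

Run as is, the three AI tokens come out blocked while Googlebot and Bingbot stay allowed, so ordinary search indexing is untouched. Note that `robotparser` matches plain tokens like these; it is a quick way to sanity-check a file, not a model of how every crawler interprets it.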

Publishers are also turning to robots.txt, and files like it, to tell AI crawlers what their site is about and under what conditions they may use its content. Some creators use it to describe their purpose and set boundaries; others see it as a way to shape how machines “see” their work. It’s still early, but the idea fits this moment: we can’t stop the bots, yet we can start speaking their language, on our own terms.

Closing

Perhaps this is what authorship now means: creating for an unseen, hybrid audience (part human, part machine) and continuing anyway.

The web may no longer guarantee reciprocity, but it still allows us to shape and share.

I’ll keep doing so, because each page remains a trace of attention, and attention, even unreturned, is still a form of care.