Group Chats in ChatGPT: Solving a Problem That Isn’t There

ChatGPT’s strength has been its role as a focused space to think, explore, and learn. By pushing into group messaging, it risks disturbing that clarity. When an assistant becomes a social venue, not only does the feature struggle to justify itself, it also threatens the very environment that made the tool valuable.

New features often promise a glimpse of the future. Sometimes they simply reveal what we value in the present. ChatGPT now offers group chats. On paper it brings collaboration and intelligence into our everyday messaging.

In practice it highlights something more basic: not every conversation wants or needs a machine in the room.

Quick takeaways

- Messaging is already a saturated and socially delicate domain

- Adding an AI participant risks disrupting the human rhythm of interaction

- The current implementation introduces friction rather than value

- A healthier approach would separate models from the social interface

- This is a personal take, based on direct experience

The missing use case

Group messaging apps have settled into clear roles. WhatsApp and iMessage serve intimacy and immediacy. Slack and Teams coordinate work. Discord hosts communities. These spaces feel human because they follow the grain of our conversation: mutual attention, timing, pauses, private humour.

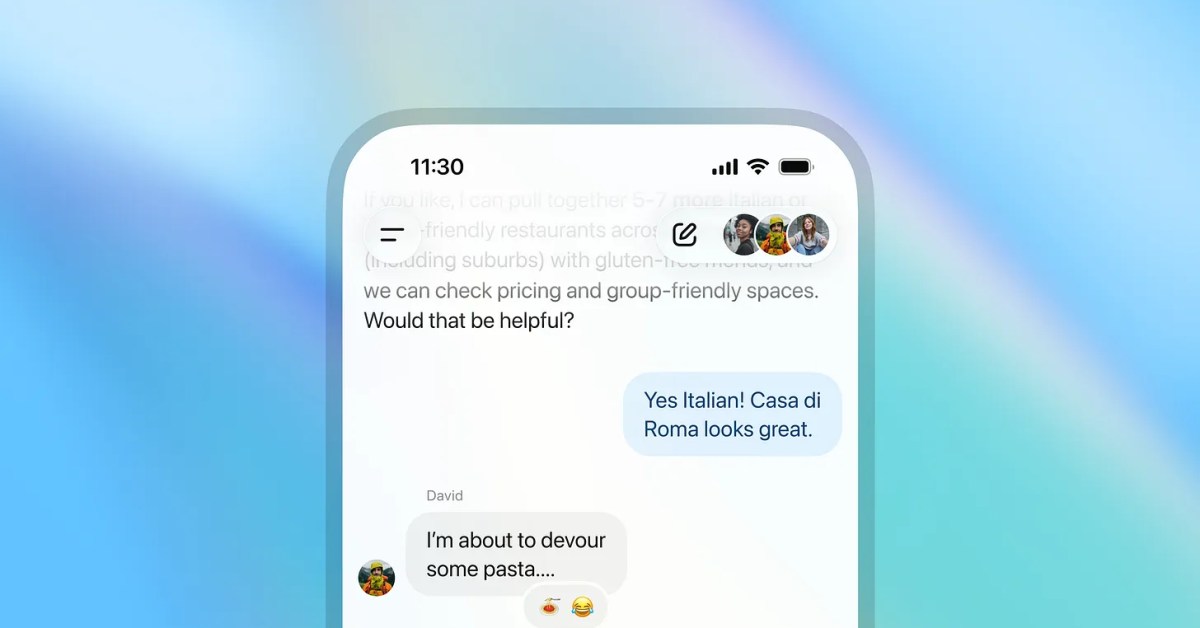

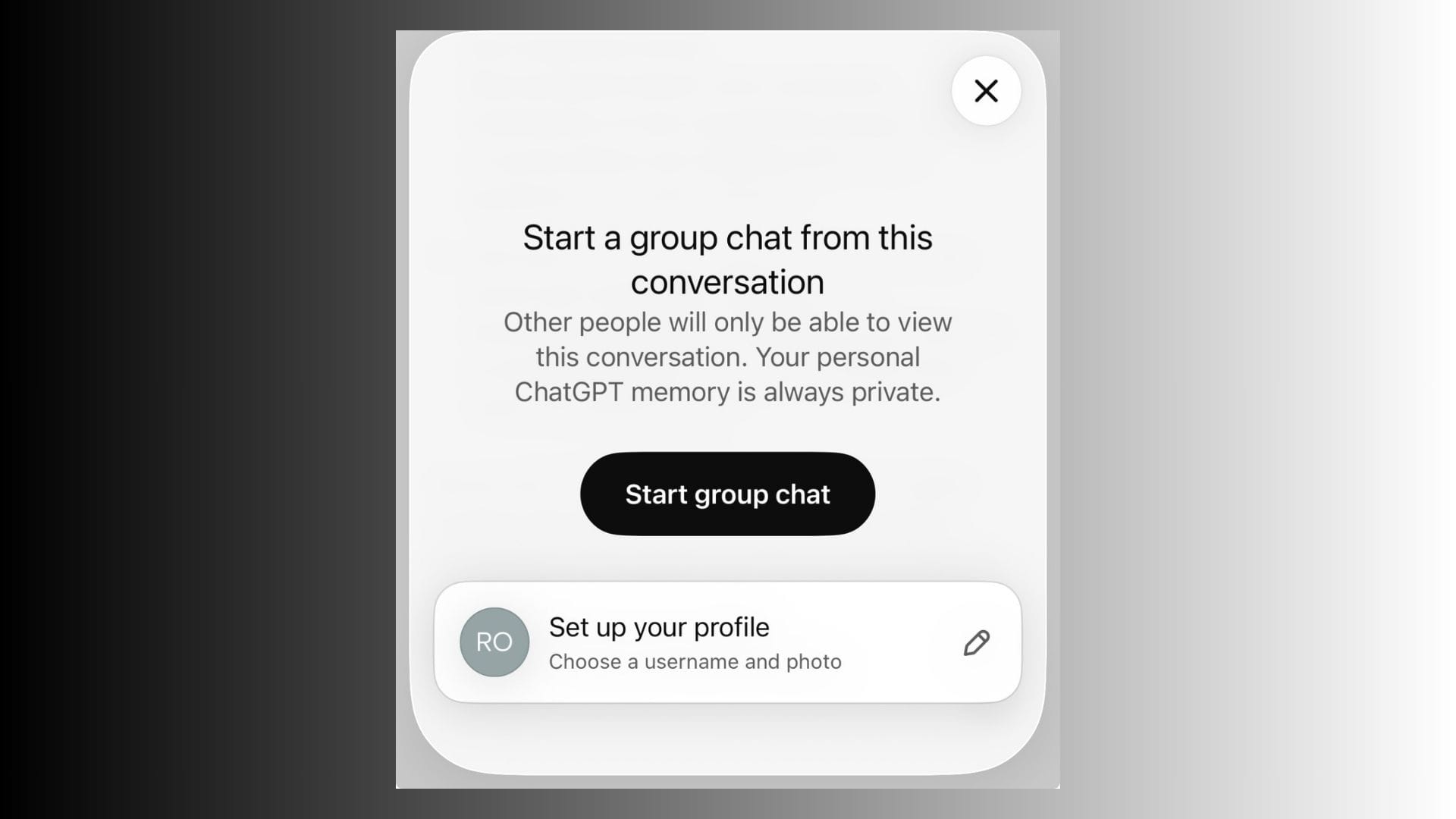

Into this arrives ChatGPT group chat. It is billed as a collaborative experience, but the design assumes a desire that does not feel widespread: moving personal or social conversations into an AI-first environment. When the assistant interjects uninvited, it breaks the tacit norms that keep these conversations comfortable.

Friction at the foundation

The issue is not only conceptual. The underlying mechanics struggle to support the new ambition. In its current form, the feature suffers from:

- region restrictions without context

- unclear boundaries between personal and business accounts

- link-sharing that fails across user types

These problems would be minor in a tool that solved a pressing need. Here they become reasons to step away entirely.

Strategy without sociology

There is a broader question: if some collaborators use other models such as Gemini or Claude, why would the shared space be a proprietary chatbot app? Human relationships do not align neatly with vendor ecosystems. Forcing them into one feels artificial.

A more grounded architecture might look like:

- models delivered as utilities through standard APIs

- a memory layer owned and controlled by the user

- interfaces chosen to suit context, not platform strategy

The AI becomes an invisible capability rather than a visible participant trying to be social.
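To make the three ideas above a little more concrete, here is a minimal sketch, assuming a very simplified world where every model is reachable through the same "text in, text out" call. `ModelProvider`, `UserMemory`, `EchoModel` and `ask` are hypothetical names invented for illustration; `EchoModel` is a stand-in and does not call any real vendor API. The point is only the shape: the memory belongs to the user and travels with each request, and the model behind it is interchangeable.

```python
from dataclasses import dataclass, field
from typing import Protocol


class ModelProvider(Protocol):
    """Any model exposed as a plain utility: text in, text out (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class UserMemory:
    """A memory layer owned by the user, not by any single vendor."""

    notes: list[str] = field(default_factory=list)

    def remember(self, note: str) -> None:
        self.notes.append(note)

    def as_context(self) -> str:
        return "\n".join(self.notes)


class EchoModel:
    """Hypothetical stand-in for a real provider adapter; it just echoes the last prompt line."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt.splitlines()[-1]}"


def ask(model: ModelProvider, memory: UserMemory, question: str) -> str:
    # The user's memory is prepended to the request, whichever model answers it.
    prompt = f"{memory.as_context()}\n{question}" if memory.notes else question
    return model.complete(prompt)


memory = UserMemory()
memory.remember("Prefers concise answers.")
print(ask(EchoModel("model-a"), memory, "Summarise the plan."))  # [model-a] Summarise the plan.
print(ask(EchoModel("model-b"), memory, "Summarise the plan."))  # [model-b] Summarise the plan.
```

Swapping `model-a` for `model-b` changes nothing about the user's memory or the interface around it, which is the property the list above argues for.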

Where this could work

There are places where collaborative intelligence fits naturally. Study groups experimenting together. Teams preparing documents. Shared planning sessions. These are tasks, not chit-chat. In those environments, a bot can enter as a tool requested by the group, not an entity sitting at the table by default.
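One way to read "a tool requested by the group" is an opt-in trigger: the assistant stays silent unless a message explicitly summons it. The sketch below assumes a hypothetical `@assistant` prefix as that trigger and takes the assistant as a plain callable; neither reflects how any existing product works.

```python
import re
from typing import Callable, Optional

# Hypothetical trigger: a message must start with "@assistant" to summon the bot.
MENTION = re.compile(r"^@assistant\b\s*(.*)", re.IGNORECASE | re.DOTALL)


def route_message(text: str, assistant: Callable[[str], str]) -> Optional[str]:
    """Return an assistant reply only when the group explicitly asks for one."""
    match = MENTION.match(text.strip())
    if match is None:
        return None  # ordinary human conversation: the assistant stays quiet
    request = match.group(1).strip()
    if not request:
        return None  # a bare mention with no request is also ignored
    return assistant(request)


def fake_assistant(request: str) -> str:
    # Stand-in for a real model call.
    return f"Draft: {request}"


print(route_message("see you at 7?", fake_assistant))  # None
print(route_message("@assistant draft an agenda", fake_assistant))  # Draft: draft an agenda
```

The default is silence; the bot only takes a turn when someone in the group hands it one.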

Closing

This is not a rejection of AI in communication. It is a reminder that our most important conversations rely on trust, timing, and the comfort of speaking freely. Technology can support that, but only if it understands when to stay quiet.